How to detect HHH000104 issues with hibernate.query.fail_on_pagination_over_collection_fetch

Imagine having a tool that can automatically detect JPA and Hibernate performance issues. Wouldn’t that be just awesome?

Well, Hypersistence Optimizer is that tool! And it works with Spring Boot, Spring Framework, Jakarta EE, Java EE, Quarkus, or Play Framework.

So, enjoy spending your time on the things you love rather than fixing performance issues in your production system on a Saturday night!

Introduction

Recently, I noticed the hibernate.query.fail_on_pagination_over_collection_fetch configuration property that was introduced in Hibernate 5.2, and I had absolutely no idea it can be used to prevent the HHH000104 Hibernate issues.

As previously explained, if you want to overcome the “HHH000104: firstResult/maxResults specified with collection fetch; applying in memory!” issue, you have to either use 2 queries or a window function to fetch and limit the number of parent records while making sure you always fetch all their associated child entities.

Even if the HHH000104 issue is logged as a warning message, it might pass unnoticed when the amount of data is fairly low, only to become a significant performance issue when data starts accumulating.

In this article, I’m going to explain why you should always activate the hibernate.query.fail_on_pagination_over_collection_fetch configuration property and, instead of a warning message, you will get an exception that’s unlikely to go unnoticed.

Fetching an entity collection with pagination

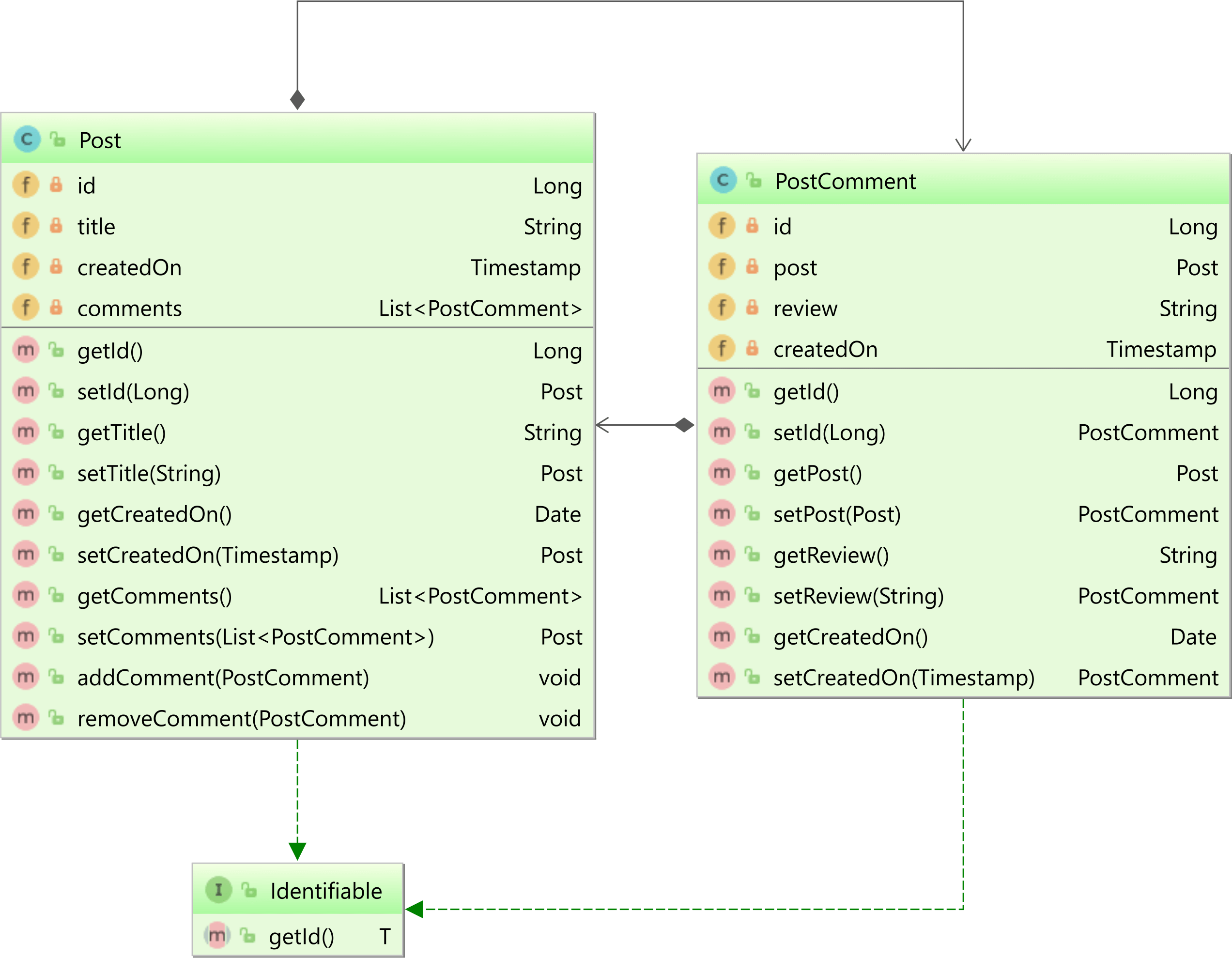

Considering we have the following Post and PostComment entities which form a bidirectional one-to-many association:

We want to fetch 5 Post entities along with all their associated PostComment child entities while filtering the title property of the parent Post entities, so we write the following JPQL query:

List<Post> posts = entityManager.createQuery("""

select p

from Post p

left join fetch p.comments

where p.title like :titlePattern

order by p.createdOn, p.id

""", Post.class)

.setParameter(

"titlePattern",

"High-Performance Java Persistence %"

)

.setMaxResults(5)

.getResultList();

assertEquals(5, posts.size());

assertArrayEquals(

LongStream.rangeClosed(1, 5).toArray(),

posts.stream().mapToLong(Post::getId).toArray()

);

If we run the JPQL query above, you will see that only the first 5 Post entities are going to be returned.

However, if you take a look at the log, you will see the following output:

WARN [main]: o.h.h.i.a.QueryTranslatorImpl -

HHH000104: firstResult/maxResults specified with collection fetch; applying in memory!

DEBUG [main]: n.t.d.l.SLF4JQueryLoggingListener -

Query:["

SELECT

p.id, p.created_on, p.title,

pc.id, pc.post_id, pc.created_on, pc.review

FROM post p

LEFT OUTER JOIN post_comment pc

ON p.id = pc.post_id

WHERE p.title LIKE ?

ORDER BY p.created_on

"],

Params:[(

'High-Performance Java Persistence %'

)]

The first thing to notice is the HHH000104 warning message which indicates that the pagination was done in-memory. The SQL statement confirms this as there is no LIMIT clause used to restrict the result set size.

If there are 100 post records matching the provided title pattern and each post has around 50 comments, then this result set will contain 5000 records that will be fetched by Hibernate, only to be discarded after reaching the provided threshold of 5 Post entities.

Enabling the hibernate.query.fail_on_pagination_over_collection_fetch configuration

Since Hibernate ORM 5.2.13, you can now enable the hibernate.query.fail_on_pagination_over_collection_fetch configuration property as follows:

spring.jpa.properties.hibernate.query.fail_on_pagination_over_collection_fetch=true

This way, when running the previous JPQL query:

try {

entityManager.createQuery("""

select p

from Post p

left join fetch p.comments

where p.title like :titlePattern

order by p.createdOn, p.id

""", Post.class)

.setParameter(

"titlePattern",

"High-Performance Java Persistence %"

)

.setMaxResults(5)

.getResultList();

fail("Should have thrown Exception");

} catch (Exception e) {

assertTrue(

e.getMessage().contains(

"In memory pagination was about to be applied"

)

);

}

A HibernateException is thrown instead of just logging a warning message. This way, you are going to get instant feedback of these in-memory pagination issues and address them long before they hit production systems.

This setting is not just for JPQL queries, being applied to Criteria API queries as well.

Writing JPA Criteria API queries is not very easy. The Codota IDE plugin can guide you on how to write such queries, therefore increasing your productivity.

For more details about how you can use Codota to speed up the process of writing Criteria API queries, check out this article.

try {

CriteriaBuilder builder = entityManager.getCriteriaBuilder();

CriteriaQuery<Post> criteria = builder.createQuery(Post.class);

Root<Post> post = criteria.from(Post.class);

post.fetch(Post_.comments);

ParameterExpression<String> parameterExpression = builder.parameter(String.class);

criteria.where(

builder.like(

post.get(Post_.title),

parameterExpression

)

)

.orderBy(

builder.asc(

post.get(Post_.createdOn)

)

);

entityManager

.createQuery(criteria)

.setParameter(

parameterExpression,

"High-Performance Java Persistence %"

)

.setMaxResults(5)

.getResultList();

fail("Should have thrown Exception");

} catch (Exception e) {

assertTrue(

e.getMessage().contains(

"In memory pagination was about to be applied"

)

);

}

Cool, right?

I'm running an online workshop on the 20-21 and 23-24 of November about High-Performance Java Persistence.

If you enjoyed this article, I bet you are going to love my Book and Video Courses as well.

Conclusion

The hibernate.query.fail_on_pagination_over_collection_fetch configuration property is very useful and you should definitely have it enabled if you are using a Hibernate ORM version that’s greater than 5.2.13.