How does Hibernate READ_WRITE CacheConcurrencyStrategy work

Introduction

In my previous post, I introduced the NONSTRICT_READ_WRITE second-level cache concurrency mechanism. In this article, I am going to continue this topic with the READ_WRITE strategy.

Write-through caching

NONSTRICT_READ_WRITE is a read-through caching strategy, and updates end up invalidating cache entries. As simple as this strategy may be, its performance drops as the number of write operations increases. A write-through cache strategy is a better choice for write-intensive applications, since cache entries can be updated rather than discarded.
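To make the difference concrete, here is a minimal sketch that counts database reads under both policies. It is a toy model and not Hibernate code: the CacheUpdatePolicies class, its two maps, and its method names are all hypothetical and exist only for illustration.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical toy model, not Hibernate code: a "database" map plus a cache map.
class CacheUpdatePolicies {
    final Map<Long, String> database = new HashMap<>();
    final Map<Long, String> cache = new HashMap<>();
    int databaseReads = 0;

    // Read-through: on a cache miss, load from the database and cache the result.
    String read(Long id) {
        String cached = cache.get(id);
        if (cached != null) {
            return cached;
        }
        databaseReads++;
        String loaded = database.get(id);
        if (loaded != null) {
            cache.put(id, loaded);
        }
        return loaded;
    }

    // Invalidation on update: the next read has to hit the database.
    void updateAndInvalidate(Long id, String value) {
        database.put(id, value);
        cache.remove(id);
    }

    // Write-through on update: the cache entry is refreshed with the new state.
    void updateAndWriteThrough(Long id, String value) {
        database.put(id, value);
        cache.put(id, value);
    }

    public static void main(String[] args) {
        CacheUpdatePolicies store = new CacheUpdatePolicies();
        store.database.put(1L, "v0");
        store.read(1L);                         // cache miss, first database read
        store.updateAndInvalidate(1L, "v1");
        store.read(1L);                         // miss again, second database read
        store.updateAndWriteThrough(1L, "v2");
        store.read(1L);                         // cache hit, no database read
        System.out.println("Database reads: " + store.databaseReads); // Database reads: 2
    }
}
```

The invalidating update costs one extra database read on the next access, while the write-through update does not. The sketch ignores transactions and concurrency, which is exactly what the READ_WRITE locking mechanism has to deal with.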

Because the database is the system of record and database operations are wrapped inside physical transactions, the cache can be updated either synchronously (as is the case with the TRANSACTIONAL cache concurrency strategy) or asynchronously (right after the database transaction is committed).

The READ_WRITE strategy is an asynchronous cache concurrency mechanism. To prevent data integrity issues (e.g. stale cache entries), it uses a locking mechanism that provides unit-of-work isolation guarantees.

Inserting data

Because persisted entities are uniquely identified (each entity being assigned to a distinct database row), the newly created entities get cached right after the database transaction is committed:

    @Override
    public boolean afterInsert(
            Object key, Object value, Object version)
            throws CacheException {
        region().writeLock( key );
        try {
            final Lockable item =
                (Lockable) region().get( key );
            if ( item == null ) {
                region().put( key,
                    new Item( value, version,
                        region().nextTimestamp()
                    )
                );
                return true;
            }
            else {
                return false;
            }
        }
        finally {
            region().writeUnlock( key );
        }
    }

For an entity to be cached upon insertion, it must use a SEQUENCE generator, the cache being populated by the EntityInsertAction:

    @Override
    public void doAfterTransactionCompletion(boolean success,
            SessionImplementor session)
            throws HibernateException {
        final EntityPersister persister = getPersister();
        if ( success && isCachePutEnabled( persister,
                getSession() ) ) {
            final CacheKey ck = getSession()
                .generateCacheKey(
                    getId(),
                    persister.getIdentifierType(),
                    persister.getRootEntityName() );
            final boolean put = cacheAfterInsert(
                persister, ck );
        }
        postCommitInsert( success );
    }

The IDENTITY generator doesn’t play well with the transactional write-behind first-level cache design, so the associated EntityIdentityInsertAction doesn’t cache newly inserted entries (at least until HHH-7964 is fixed).

Theoretically, between the database transaction commit and the second-level cache insert, a concurrent transaction might load the newly created entity, thereby triggering a cache insert of its own. Although this is possible, the cache synchronization lag is very short, and if a concurrent transaction is interleaved, the only consequence is that the other transaction hits the database instead of loading the entity from the cache.
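The interleaving can be sketched with a plain concurrent map. The InsertRaceSketch class is hypothetical and stands in for the cache region. It keeps only the "cache the entry if none exists yet" rule from afterInsert and assumes the concurrent reader stores its loaded state under the same rule.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Hypothetical stand-in for a cache region; not the Ehcache or Hibernate API.
class InsertRaceSketch {
    private final ConcurrentMap<Object, Object> region = new ConcurrentHashMap<>();

    // Mirrors the afterInsert contract: cache the entry only if none exists yet.
    boolean afterInsert(Object key, Object value) {
        return region.putIfAbsent(key, value) == null;
    }

    // A reader that missed the cache stores the state it loaded from the database.
    boolean putFromLoad(Object key, Object value) {
        return region.putIfAbsent(key, value) == null;
    }

    Object get(Object key) {
        return region.get(key);
    }

    public static void main(String[] args) {
        InsertRaceSketch cache = new InsertRaceSketch();
        // The reader runs between the commit and the writer's afterInsert callback.
        boolean readerCached = cache.putFromLoad("Repository#1", "committed row state");
        boolean writerCached = cache.afterInsert("Repository#1", "committed row state");
        System.out.println(readerCached + " " + writerCached); // true false
    }
}
```

Both transactions see the same committed row, so it does not matter which one populates the cache. The only cost is the reader's extra database round trip.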

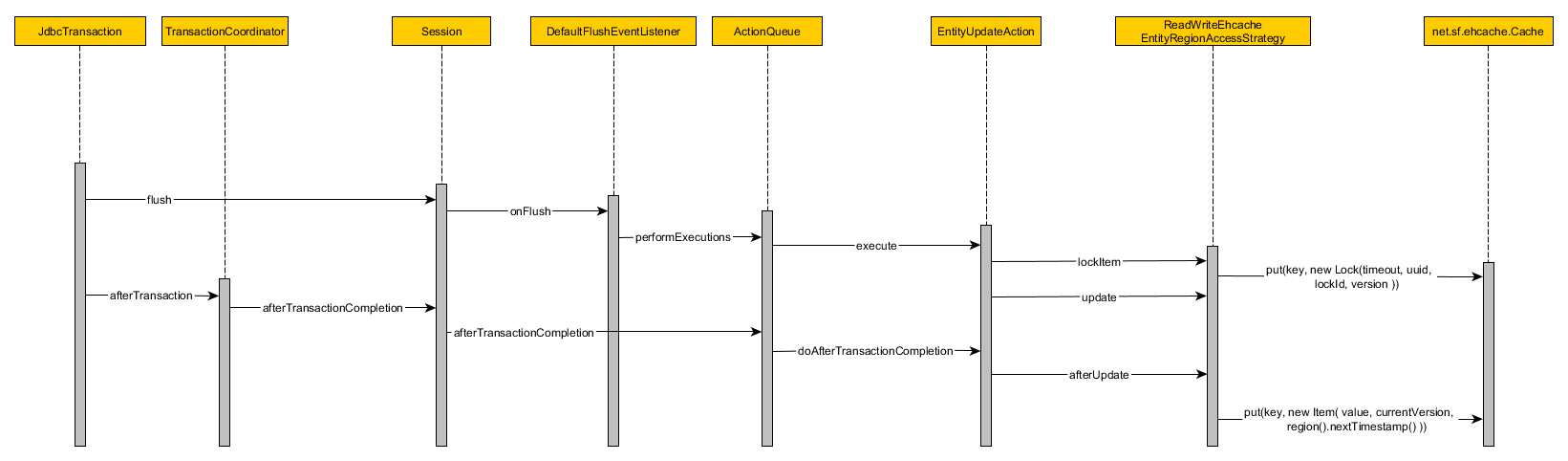

Updating data

While inserting entities is a rather simple operation, for updates, we need to synchronize both the database and the cache entry. The READ_WRITE concurrency strategy employs a locking mechanism to ensure data integrity:

- The Hibernate Transaction commit procedure triggers a Session flush

- The EntityUpdateAction replaces the current cache entry with a Lock object

- The update method is used for synchronous cache updates so it doesn’t do anything when using an asynchronous cache concurrency strategy, like READ_WRITE

- After the database transaction is committed, the after-transaction-completion callbacks are called

- The EntityUpdateAction calls the afterUpdate method of the EntityRegionAccessStrategy

- The ReadWriteEhcacheEntityRegionAccessStrategy replaces the Lock entry with an actual Item, encapsulating the entity’s disassembled state
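The steps above can be followed in a small single-threaded model. Everything in it is a simplification: UpdateLifecycleSketch, its Lock and Item classes, and the counter used as a clock are hypothetical stand-ins, not the Hibernate or Ehcache types.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, single-threaded model of a READ_WRITE cache region; not Hibernate code.
class UpdateLifecycleSketch {
    // Marker entry that is never readable.
    static final class Lock { }

    // Cached state plus the logical time at which it was stored.
    static final class Item {
        final Object value;
        final long timestamp;

        Item(Object value, long timestamp) {
            this.value = value;
            this.timestamp = timestamp;
        }
    }

    private final Map<Object, Object> region = new HashMap<>();
    private long clock = 0;

    // Logical clock standing in for region().nextTimestamp().
    long nextTimestamp() {
        return ++clock;
    }

    // Flush time: the current entry is swapped for a Lock.
    void lockItem(Object key) {
        region.put(key, new Lock());
    }

    // After commit: the entry is swapped for an Item holding the new state.
    void afterUpdate(Object key, Object value) {
        region.put(key, new Item(value, nextTimestamp()));
    }

    // Returns the cached value, or null when the caller must go to the database.
    Object get(Object key, long txTimestamp) {
        Object entry = region.get(key);
        if (entry instanceof Item) {
            Item item = (Item) entry;
            return txTimestamp > item.timestamp ? item.value : null;
        }
        return null;
    }

    public static void main(String[] args) {
        UpdateLifecycleSketch cache = new UpdateLifecycleSketch();
        cache.afterUpdate("Repository#1", "Hibernate");          // seed an Item at t=1
        long session1 = cache.nextTimestamp();                   // session created at t=2
        System.out.println(cache.get("Repository#1", session1)); // Hibernate

        cache.lockItem("Repository#1");                          // flush replaces the Item
        System.out.println(cache.get("Repository#1", session1)); // null

        cache.afterUpdate("Repository#1", "High-Performance Hibernate"); // Item at t=3
        System.out.println(cache.get("Repository#1", session1)); // null, session predates the Item
        long session2 = cache.nextTimestamp();                   // session created at t=4
        System.out.println(cache.get("Repository#1", session2)); // High-Performance Hibernate
    }
}
```

While the Lock is in place every reader falls back to the database. Once the Item is written, only sessions created after it may read it.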

Deleting data

Deleting entities is similar to the update process, as we can see from the following sequence diagram:

After an entity is deleted, its associated second-level cache entry is replaced by a Lock object, which forces any subsequent request to read from the database instead of using the cache entry.

Locking constructs

Both the Item and the Lock classes inherit from the Lockable type and each of these two has a specific policy for allowing a cache entry to be read or written.

The READ_WRITE Lock object

The Lock class defines the following methods:

    @Override
    public boolean isReadable(long txTimestamp) {
        return false;
    }

    @Override
    public boolean isWriteable(long txTimestamp,
            Object newVersion, Comparator versionComparator) {
        if ( txTimestamp > timeout ) {
            // if timedout then allow write
            return true;
        }
        if ( multiplicity > 0 ) {
            // if still locked then disallow write
            return false;
        }
        return version == null
            ? txTimestamp > unlockTimestamp
            : versionComparator.compare( version,
                newVersion ) < 0;
    }

- A Lock object doesn’t allow reading the cache entry, so any subsequent request must go to the database

- If the current Session creation timestamp is greater than the Lock timeout threshold, the cache entry is allowed to be written

- If at least one Session has managed to lock this entry, any write operation is forbidden

- A Lock entry allows writing if the incoming entity state has incremented its version or the current Session creation timestamp is greater than the current entry unlocking timestamp
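A worked example with concrete numbers may help here. The sketch below is a trimmed-down copy of the isWriteable rules, wrapped in a hypothetical LockWriteabilitySketch class. The constructor, the unlock method, and the integer version comparator are assumptions made for illustration and are not the actual Hibernate implementation.

```java
import java.util.Comparator;

// Hypothetical, trimmed-down copy of the Lock write rules; not the Hibernate class.
class LockWriteabilitySketch {
    // Assumes entity versions are Integer values.
    static final Comparator<Object> VERSIONS = Comparator.comparingInt(v -> (Integer) v);

    static final class Lock {
        final long timeout;         // timestamp after which the lock is considered expired
        final Object version;       // entity version seen when the lock was taken, may be null
        int multiplicity = 1;       // number of sessions currently holding the lock
        long unlockTimestamp = -1;  // set when the last holder releases the lock

        Lock(long timeout, Object version) {
            this.timeout = timeout;
            this.version = version;
        }

        // Assumed release rule: the last holder records the unlock time.
        void unlock(long timestamp) {
            if (--multiplicity == 0) {
                unlockTimestamp = timestamp;
            }
        }

        boolean isWriteable(long txTimestamp, Object newVersion,
                Comparator<Object> versionComparator) {
            if (txTimestamp > timeout) {
                return true;   // timed out, allow write
            }
            if (multiplicity > 0) {
                return false;  // still locked, disallow write
            }
            return version == null
                ? txTimestamp > unlockTimestamp
                : versionComparator.compare(version, newVersion) < 0;
        }
    }

    public static void main(String[] args) {
        Lock held = new Lock(1000, 0);
        System.out.println(held.isWriteable(500, 1, VERSIONS));  // false, still locked
        System.out.println(held.isWriteable(1001, 0, VERSIONS)); // true, lock timed out
        held.unlock(600);
        System.out.println(held.isWriteable(700, 0, VERSIONS));  // false, version not incremented
        System.out.println(held.isWriteable(700, 1, VERSIONS));  // true, version 0 -> 1

        Lock unversioned = new Lock(1000, null);
        unversioned.unlock(600);
        System.out.println(unversioned.isWriteable(500, null, VERSIONS)); // false, session predates unlock
        System.out.println(unversioned.isWriteable(700, null, VERSIONS)); // true, session newer than unlock
    }
}
```

Note the third case: a released Lock still rejects a write that carries the same version, so after a rollback only the timeout lets the entry be replaced.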

The READ_WRITE Item object

The Item class defines the following read/write access policy:

    @Override
    public boolean isReadable(long txTimestamp) {
        return txTimestamp > timestamp;
    }

    @Override
    public boolean isWriteable(long txTimestamp,
            Object newVersion, Comparator versionComparator) {
        return version != null && versionComparator
            .compare( version, newVersion ) < 0;
    }

- An Item is readable only from a Session that’s been started after the cache entry creation time

- An Item entry allows writing only if the incoming entity state has incremented its version
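The same two rules can be exercised with concrete timestamps and versions. The ItemAccessSketch class below is a hypothetical, simplified copy of the policy, and it assumes Integer versions.

```java
import java.util.Comparator;

// Hypothetical, simplified copy of the Item access rules; not the Hibernate class.
class ItemAccessSketch {
    // Assumes entity versions are Integer values.
    static final Comparator<Object> VERSIONS = Comparator.comparingInt(v -> (Integer) v);

    static final class Item {
        final Object value;
        final Object version;  // null for entities without a version attribute
        final long timestamp;  // time at which the entry was cached

        Item(Object value, Object version, long timestamp) {
            this.value = value;
            this.version = version;
            this.timestamp = timestamp;
        }

        // Only sessions created after the entry was cached may read it.
        boolean isReadable(long txTimestamp) {
            return txTimestamp > timestamp;
        }

        // Only a strictly newer version may overwrite the entry.
        boolean isWriteable(long txTimestamp, Object newVersion,
                Comparator<Object> versionComparator) {
            return version != null
                && versionComparator.compare(version, newVersion) < 0;
        }
    }

    public static void main(String[] args) {
        Item item = new Item("Hibernate", 1, 100);
        System.out.println(item.isReadable(90));                 // false, session older than entry
        System.out.println(item.isReadable(110));                // true
        System.out.println(item.isWriteable(110, 1, VERSIONS));  // false, same version
        System.out.println(item.isWriteable(110, 2, VERSIONS));  // true, version 1 -> 2

        Item unversioned = new Item("Hibernate", null, 100);
        System.out.println(unversioned.isWriteable(110, null, VERSIONS)); // false
    }
}
```

The last case shows that an Item without a version is never overwritten through this check, regardless of the session timestamp.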

Cache entry concurrency control

These concurrency control mechanisms are invoked when saving and reading the underlying cache entries.

The cache entry is read when the ReadWriteEhcacheEntityRegionAccessStrategy get method is called:

    public final Object get(Object key, long txTimestamp)
            throws CacheException {
        readLockIfNeeded( key );
        try {
            final Lockable item =
                (Lockable) region().get( key );
            final boolean readable =
                item != null &&
                item.isReadable( txTimestamp );
            if ( readable ) {
                return item.getValue();
            }
            else {
                return null;
            }
        }
        finally {
            readUnlockIfNeeded( key );
        }
    }

The cache entry is written by the ReadWriteEhcacheEntityRegionAccessStrategy putFromLoad method:

    public final boolean putFromLoad(
            Object key,
            Object value,
            long txTimestamp,
            Object version,
            boolean minimalPutOverride)
            throws CacheException {
        region().writeLock( key );
        try {
            final Lockable item =
                (Lockable) region().get( key );
            final boolean writeable =
                item == null ||
                item.isWriteable(
                    txTimestamp,
                    version,
                    versionComparator );
            if ( writeable ) {
                region().put(
                    key,
                    new Item(
                        value,
                        version,
                        region().nextTimestamp()
                    )
                );
                return true;
            }
            else {
                return false;
            }
        }
        finally {
            region().writeUnlock( key );
        }
    }

Timing out

If the database operation fails, the current cache entry still holds a Lock object and cannot roll back to its previous Item state. For this reason, the Lock must time out to allow the cache entry to be replaced by an actual Item object. The EhcacheDataRegion defines the following timeout property:

    private static final String CACHE_LOCK_TIMEOUT_PROPERTY =
        "net.sf.ehcache.hibernate.cache_lock_timeout";
    private static final int DEFAULT_CACHE_LOCK_TIMEOUT = 60000;

Unless we override the net.sf.ehcache.hibernate.cache_lock_timeout property, the default timeout is 60 seconds:

    final String timeout = properties.getProperty(
        CACHE_LOCK_TIMEOUT_PROPERTY,
        Integer.toString( DEFAULT_CACHE_LOCK_TIMEOUT )
    );

The following test will emulate a failing database transaction, so we can observe how the READ_WRITE cache only allows writing after the timeout threshold expires. First, we are going to lower the timeout value, to reduce the cache freezing period:

    properties.put(
        "net.sf.ehcache.hibernate.cache_lock_timeout",
        String.valueOf(250));

We’ll use a custom interceptor to manually roll back the currently running transaction:

    @Override
    protected Interceptor interceptor() {
        return new EmptyInterceptor() {
            @Override
            public void beforeTransactionCompletion(
                    Transaction tx) {
                if(applyInterceptor.get()) {
                    tx.rollback();
                }
            }
        };
    }

The following routine will test the lock timeout behavior:

    try {
        doInTransaction(session -> {
            Repository repository = (Repository)
                session.get(Repository.class, 1L);
            repository.setName("High-Performance Hibernate");
            applyInterceptor.set(true);
        });
    } catch (Exception e) {
        LOGGER.info("Expected", e);
    }
    applyInterceptor.set(false);

    AtomicReference<Object> previousCacheEntryReference =
        new AtomicReference<>();
    AtomicBoolean cacheEntryChanged = new AtomicBoolean();

    while (!cacheEntryChanged.get()) {
        doInTransaction(session -> {
            boolean entryChange;
            session.get(Repository.class, 1L);
            try {
                Object previousCacheEntry =
                    previousCacheEntryReference.get();
                Object cacheEntry =
                    getCacheEntry(Repository.class, 1L);
                entryChange = previousCacheEntry != null &&
                    previousCacheEntry != cacheEntry;
                previousCacheEntryReference.set(cacheEntry);
                LOGGER.info("Cache entry {}",
                    ToStringBuilder.reflectionToString(
                        cacheEntry));
                if(!entryChange) {
                    sleep(100);
                } else {
                    cacheEntryChanged.set(true);
                }
            } catch (IllegalAccessException e) {
                LOGGER.error("Error accessing Cache", e);
            }
        });
    }

Running this test generates the following output:

    select
        readwritec0_.id as id1_0_0_,
        readwritec0_.name as name2_0_0_,
        readwritec0_.version as version3_0_0_
    from
        repository readwritec0_
    where
        readwritec0_.id=1

    update
        repository
    set
        name='High-Performance Hibernate',
        version=1
    where
        id=1
        and version=0

    JdbcTransaction - rolled JDBC Connection

    select
        readwritec0_.id as id1_0_0_,
        readwritec0_.name as name2_0_0_,
        readwritec0_.version as version3_0_0_
    from
        repository readwritec0_
    where
        readwritec0_.id = 1

    Cache entry net.sf.ehcache.Element@3f9a0805[
        key=ReadWriteCacheConcurrencyStrategyWithLockTimeoutTest$Repository#1,
        value=Lock Source-UUID:ac775350-3930-4042-84b8-362b64c47e4b Lock-ID:0,
        version=1,
        hitCount=3,
        timeToLive=120,
        timeToIdle=120,
        lastUpdateTime=1432280657865,
        cacheDefaultLifespan=true,
        id=0
    ]

    Wait 100 ms!

    JdbcTransaction - committed JDBC Connection

    select
        readwritec0_.id as id1_0_0_,
        readwritec0_.name as name2_0_0_,
        readwritec0_.version as version3_0_0_
    from
        repository readwritec0_
    where
        readwritec0_.id = 1

    Cache entry net.sf.ehcache.Element@3f9a0805[
        key=ReadWriteCacheConcurrencyStrategyWithLockTimeoutTest$Repository#1,
        value=Lock Source-UUID:ac775350-3930-4042-84b8-362b64c47e4b Lock-ID:0,
        version=1,
        hitCount=3,
        timeToLive=120,
        timeToIdle=120,
        lastUpdateTime=1432280657865,
        cacheDefaultLifespan=true,
        id=0
    ]

    Wait 100 ms!

    JdbcTransaction - committed JDBC Connection

    select
        readwritec0_.id as id1_0_0_,
        readwritec0_.name as name2_0_0_,
        readwritec0_.version as version3_0_0_
    from
        repository readwritec0_
    where
        readwritec0_.id = 1

    Cache entry net.sf.ehcache.Element@305f031[
        key=ReadWriteCacheConcurrencyStrategyWithLockTimeoutTest$Repository#1,
        value=org.hibernate.cache.ehcache.internal.strategy.AbstractReadWriteEhcacheAccessStrategy$Item@592e843a,
        version=1,
        hitCount=1,
        timeToLive=120,
        timeToIdle=120,
        lastUpdateTime=1432280658322,
        cacheDefaultLifespan=true,
        id=0
    ]

    JdbcTransaction - committed JDBC Connection

- The first transaction tries to update an entity, so the associated second-level cache entry is locked prior to committing the transaction.

- The first transaction fails and it gets rolled back

- The lock is being held, so the next two successive transactions are going to the database, without replacing the Lock entry with the current loaded database entity state

- After the Lock timeout period expires, the third transaction can finally replace the Lock with an Item cache entry (holding the entity disassembled hydrated state)
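The timing can be worked through with simple arithmetic. The sketch below is only an illustration: the LockTimeoutTimeline class is hypothetical, timestamps are treated as plain milliseconds, and each polling transaction is assumed to start exactly one polling interval after the previous one. The real test timings will differ.

```java
// Hypothetical timeline model; timestamps are plain milliseconds, which is a simplification.
class LockTimeoutTimeline {
    // Same expiry rule as the first branch of Lock.isWriteable.
    static boolean lockExpired(long txTimestamp, long lockTimeout) {
        return txTimestamp > lockTimeout;
    }

    // Returns the 1-based index of the first polling transaction allowed to replace the Lock.
    static int firstSuccessfulPoll(long lockedAt, long timeoutMillis, long pollIntervalMillis) {
        if (pollIntervalMillis <= 0) {
            throw new IllegalArgumentException("pollIntervalMillis must be positive");
        }
        long lockTimeout = lockedAt + timeoutMillis;
        long txTimestamp = lockedAt;
        int poll = 0;
        do {
            txTimestamp += pollIntervalMillis; // the next transaction starts one interval later
            poll++;
        } while (!lockExpired(txTimestamp, lockTimeout));
        return poll;
    }

    public static void main(String[] args) {
        // 250 ms timeout, 100 ms between polls: 100 and 200 are blocked, 300 succeeds.
        System.out.println(firstSuccessfulPoll(0, 250, 100));   // 3
        // With the 60 second default, the same loop would spin far longer.
        System.out.println(firstSuccessfulPoll(0, 60000, 100)); // 601
    }
}
```

Under these assumptions, lowering the timeout to 250 ms lets the third polling transaction write the Item, while the default value would keep the entry frozen for a full minute after a failed update.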

Conclusion

The READ_WRITE concurrency strategy offers the benefits of a write-through caching mechanism, but you need to understand its inner workings to decide if it’s a good fit for your current project’s data access requirements.

For heavy write contention scenarios, the locking constructs will make other concurrent transactions hit the database, so you must decide if a synchronous cache concurrency strategy is better suited in this situation.